Hey there, been a minute 👋

It’s been a while since my last blog post! If you’re an old subscriber you might remember that during my first blog post I said I’d build 12 startups in 12 months… and then I just kind of disappeared around month 7.

I bet you’re sitting here expecting some kind of elaborate explanation in terms of why I was gone, but the reality is that around month 7 I had a couple of my ideas start to make some money so I decided to focus on them.

The “12 startups in 12 months” thing isn’t meant to be taken literally: you build new ideas until something starts to show some promise, then you focus. Which is what I did.

I also got a bit burned out of blogging. I’ve decided I’m going to keep blogging, but only when I feel like there’s something truly interesting to share related to my journey of building startups.

This month, I do have something interesting to share: a “buyers guide” and how-to on self-hosting!

And it’s going to be a long one because I put a lot of research into this, so if you find some value out of what I’ve written here:

Here’s a link to ☕️ buy me a coffee and show your support!

Self-hosting? What’s that all about now?

This month’s post is all about self-hosting for people building software startups, otherwise known as the “cloud exit,” cancelling your Vercel account and buying a low cost VPS instead, or whatever else you’d like to call it.

The general idea behind this is that if you’re a self-hoster, you deploy and maintain your own apps without the “help” of certain products that attempt to do that for you.

Now let’s go through some objections I hear a lot:

“Why would you want to do that? Vercel/AWS/etc are all fine and helped me scale quickly.”

Because products that supposedly “help” you often hurt you in the long run: once you start to achieve any degree of scale, you can end up with surprise bills from the likes of Vercel, AWS, or any other company that has usage-based pricing.

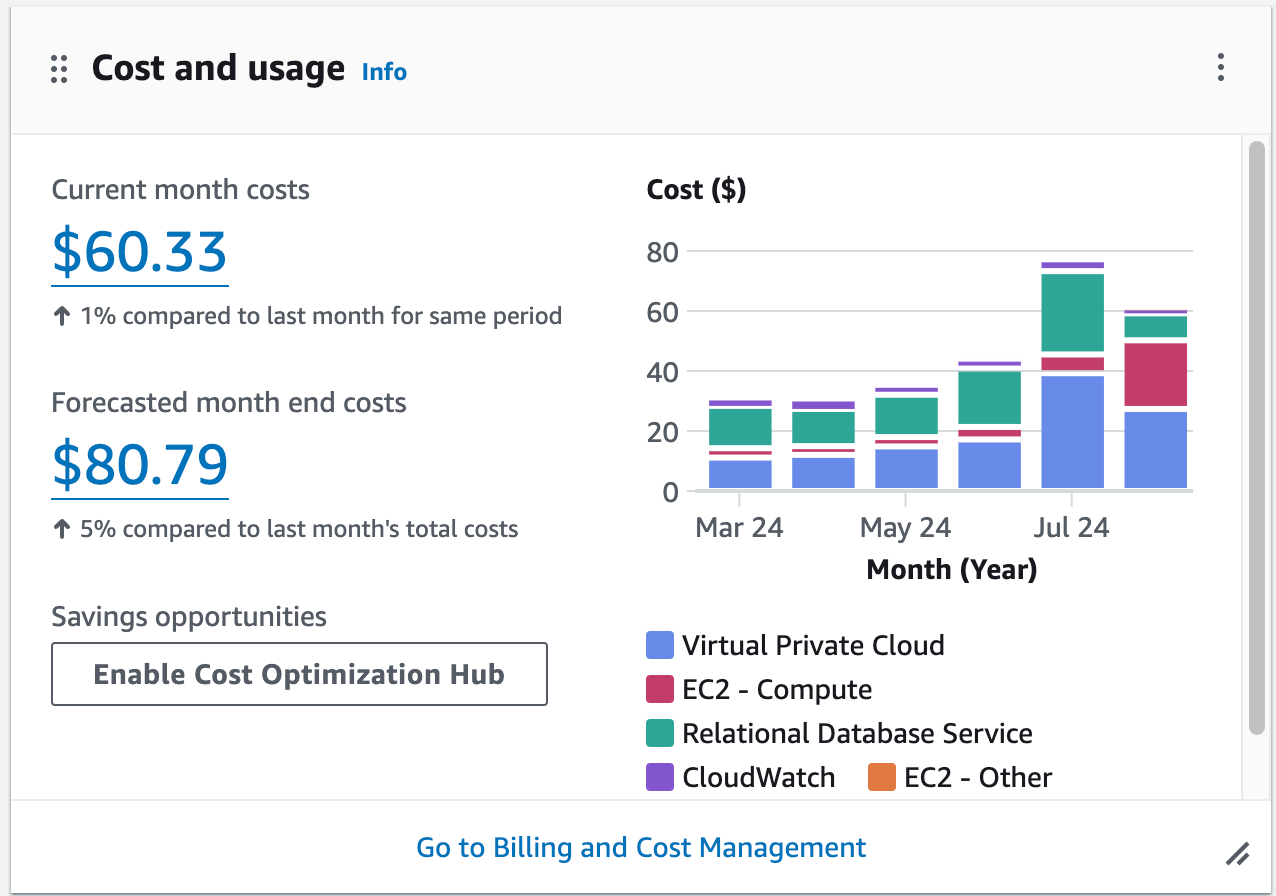

Just to give you an example, my AWS bill is expected to be over $80 this month just to host two simple apps:

My real AWS bill for this month. I’m not using anything crazy, just standard EC2, Lambda, and Postgres on RDS. Turns out Bezos’ yacht doesn’t run itself.

That’s insane considering neither of my apps get any significant amount of traffic at this point and I’m using some of the cheapest virtual machines available on the platform.

Compare that to Hetzner who offers a powerful virtual private server (VPS) with 2 Cores and 4 GB RAM for a grand total of 3.85 EUR/month 🤑

When the alternative is that cheap, AWS is a ripoff unless you have unlimited VC money or credits to burn.

“Ok, makes sense - but self-hosting is too hard and I don’t have time!”

You might think that, but it’s total nonsense. There’s this myth that self-hosting requires an entire operations team to help you, it’s too much work, or any other number of excuses.

The reality is that with a cheap VPS provider and the right technology choices, you absolutely can and should deploy your own apps with very minimal ongoing maintenance, and I’ll show you how to do it the right way in this post.

“But I have cloud credits to spend anyway.”

Cool, then spend them! I’m not trying to tell you to spend unnecessary money, so milk AWS and Vercel for all they’re worth. Then come back to this post when you’re ready to spend your own money.

Now that I’ve handled all of your objections, read on to understand how to do this - as I said, the right way.

As you might realize already, this isn’t going to be one of those blog posts where the conclusion says that “tHe SoLuTiOn DePeNdS oN yOuR uSe CaSe” - nope, I have strong opinions about this and I think there’s a good way to go about it for most people and well, here it is.

Some assumptions

Let’s start with some assumptions about the type of people who will definitely find my method of self-hosting useful.

You’ll probably find value out of this if you’re a solo founder or a small team and:

You have existing app(s) deployed somewhere like AWS or Vercel and hate your monthly bills.

Or you’re just starting out and don’t want to get shafted by usage-based pricing at any point.

Or you just like doing things yourself.

I’m a little of box 1 and box 3 myself. No shame if you’re just box 3!

You should also have an app (or be planning on building one) that has some sort of web API that is deployed via Docker.

You should also know how to run bash commands and create files. If you don’t know, ask ChatGPT how to do it.

Finally, if you’re doing something crazy with GPUs or you’re building the next Google, you will have further considerations that I won’t cover here - and if you think you’re the next Google, you need your head checked anyway.

But do we have to 100% self-host everything?

Ok, this is all about DIY and self-hosting, but I have to admit something upfront: while you could do absolutely everything yourself - and I used to do that myself with PHP + Apache in 2009 - these days I’m convinced that self-hosting, particularly as a solo developer, is made much easier and more maintainable over the long run by solutions that sit halfway between 100% DIY and a full-blown Kubernetes cluster.

The latter does require an operations team to manage especially at a high degree of scale, and that’s not what you want.

The former won’t be as crazy, but you will still have to spend time doing research on which reverse proxy you want to use (nginx vs. caddy vs. traefik vs. any number of other solutions), how to set up logging, how to integrate with CI, limiting the disk space that your logs can use, how to make sure apps automatically restart when the host/process dies, picking a linux distro, and generally manually configuring things when you could be building apps.

With that said, I think most people should use an open-source, minimal, self-hosted “platform as a service” - otherwise known as a PaaS - to help alleviate some of the above config and maintenance burden. This is the middle ground solution that I’m referring to above.

What’s a self-hosted PaaS? Think Heroku, AWS Elastic Beanstalk, or Google App Engine, but you host it yourself. If that sounds complex, it’s really not so bad. I’ll show you how to set it up below.

For your database if you have one, keep it simple and use SQLite unless you have a reason to use Postgres like I do. For the purposes of this blog post though, I’m assuming SQLite and no external dependencies for data storage.

But first, some requirements.

Now, let’s think through requirements for open-source PaaS software that can help us with our self-hosting goals.

These are the requirements I had in mind as I evaluated vendors as someone who has been deploying software professionally for over 10 years, and I think you should borrow these for your own app too:

0️⃣ Zero downtime deployments

This means when you deploy your API for example, outstanding HTTP requests can finish executing without being abruptly cut off. Failing requests = sad customers 😢 - think of the customers and keep uptime to a maximum!

🤖 Some way of automatically managing app processes running on a single host

Critically, when you deploy a new version of the app, traffic should be automatically and cleanly routed to the new app. You should also be able to increase or decrease the number of processes of each app.

Single-host deployments need to be supported, meaning you can deploy multiple apps to one beefy VPS without any hacks or compromises.

🏎️ Very low resource consumption (absolutely zero at idle) and very few or zero dependencies by default.

Some dependencies might be okay, like Docker and SSH. Those are things most people already have installed so they’re not a big deal.

But this is not rocket science! We don’t need to add a Postgres server, Redis instance, a websocket server, Kubernetes, or anything fancy just to deploy an app to a host.

Besides, adding dependencies opens you up for security vulnerabilities and maintenance headaches (someone needs to keep your dependencies up-to-date, and now that responsibility is on you)

✅ Well-tested, clearly documented, proven software that has been around for several years or more

Most times when I break this rule and try something “new and shiny” because it sounds cool I regret it. “New and shiny” usually means poorly documented and full of time-wasting gotchas with very few exceptions.

As a very important bonus, stuff that has been around a while will be indexed by OpenAI and Anthropic - so you can find help by asking ChatGPT if you get stuck. It’s a huge productivity boost and like having your very own customer support agent/expert in the room with you.

😌 Sane defaults and excellent ease of use through simple commands + CI integration

The PaaS we choose should “just work” and not require us to do too much complex configuration to get an app deployed. It should be usable in a CI pipeline.

Commands for managing apps should be able to be done through SSH or through a standard bash command. This is key because scripting and automation become much more feasible when you can perform maintenance using commands instead of UI button clicks. A UI is a nice-to-have, not a requirement.

Overall, it should be resilient to failure (survives node restarts, app restarts, etc), get out of our way, and do what it’s supposed to do.

Self-hosted PaaS Options

Browse X or do a few Google searches, and you’ll find a lot of different options that claim to help you with the above requirements:

Kamal: new software, first released in 2023 and built by the 37signals team behind Ruby on Rails and Basecamp.

Dokku: first release way back in 2014! Originally released as an alternative to Heroku and now has a lot of modern features including deployment of Docker containers.

Caprover: first release was in 2017. I see this as somewhat similar to Dokku, but with more of a focus on managing things through a UI.

Coolify: first released in 2021 and built by a solo founder named Andras who seems like a nice guy.

K3s: first released in 2019, a “lightweight” version of Kubernetes that is meant to be run in resource-constrained environments. Originally developed by Rancher Labs (since acquired by SUSE) and now a CNCF project.

At this point, you’re probably thinking: “wow, that’s a lot of different options - overwhelming”

Good thing I tested every single one of these for you so you don’t have to!

It goes without saying that none of the authors of any of these tools paid me a dime to do this analysis and I have nothing to sell you related to any of these.

So, if you appreciate this kind of thing and want to show your support, here’s a link to buy me a coffee. I massively appreciate it!

With that out of the way: it’s time to get to the test results! But first, some setup notes…

Test machine specs

For testing, I used several identical VPS nodes with the following setup with one PaaS deployed to each:

Host: Amazon Web Services

Region: us-east-1

EC2 Instance type: t4g.medium

2 vCPUs (arm64)

4 GB RAM

30 GB Storage

OS: Debian 12

Networking: IPv4 + IPv6 (dual stack)

Port speed: up to 5 Gbit/s

These are pretty much the kind of specs I’d recommend in production as well, although I would not use AWS for obvious reasons. Production specs will be covered in more detail after the test results below.

The test results

Well then, how did all of these different PaaS apps perform?

I’ll just come out and say it: I cannot recommend 4 out of the 5 in good faith.

Here are the results:

Coolify

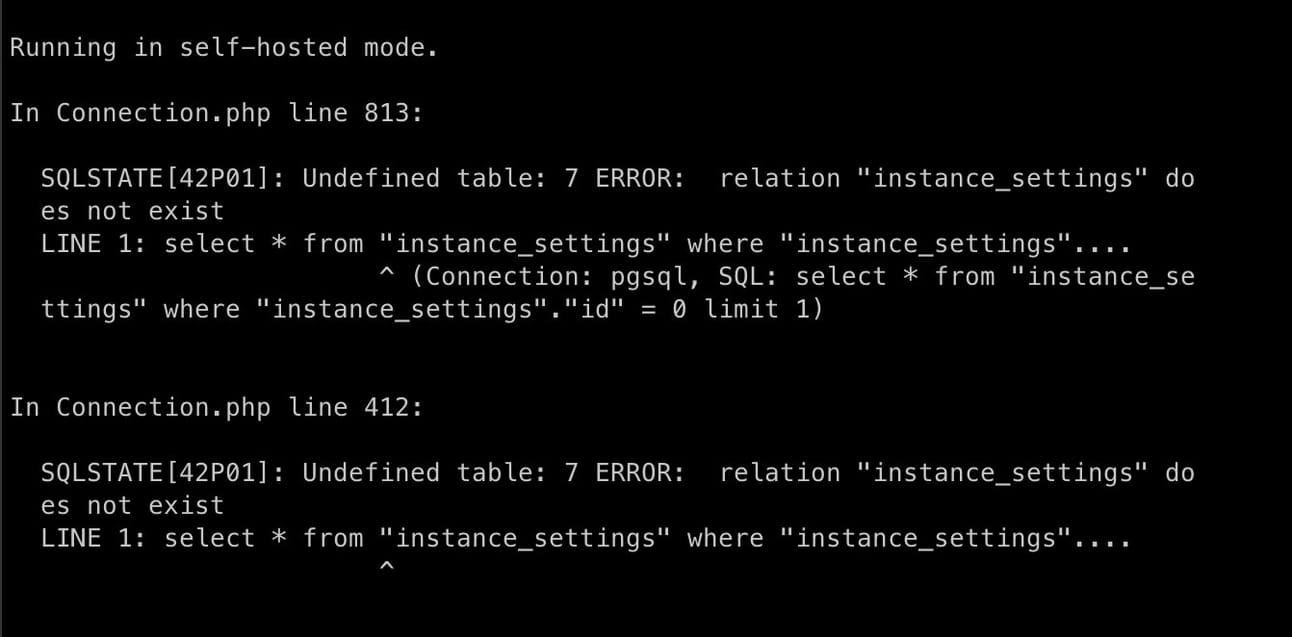

Coolify has a nice looking marketing page and seemed like it was worth a test. I did a fresh install as recommended by the docs, then rebooted to see how it would handle a node restart. Well, it didn’t:

NOTE: as of 9/3/24, @heyandras let me know this problem has been resolved (thanks Andras for fixing it!):

https://x.com/heyandras/status/1830689101873328617

The nice error message I was greeted with after rebooting. It’s apparently fixed now, thanks Andras!

Then I noticed more errors in the logs when trying to understand what was going on:

Did the author write this healthcheck properly? “I am alive!” is considered an error response?

Also, I really, really, really was not a fan of all of the bloat it installed on the server after a fresh install with nothing running. See here:

PHP and Redis and Websockets and random containers oh my 😬

That’s over 10% of your RAM gone for no reason at all, plus a few percentage points of CPU utilization that shouldn’t be there.

I say “gone for no reason at all” because you don’t need any of this stuff to deploy an app to a server, I promise.

Here are some of the docker images it uses as dependencies including a 2 year old version of Postgres that is in need of an update. See what I mean about maintenance headaches when you add dependencies?

To be clear, these extra dependencies are probably why Coolify’s docs “don’t recommend” installing Coolify on the same server the apps run on.

But that solution is really not ideal either - now I have to run Coolify on my local machine, pay for Coolify Cloud, or buy two VPS nodes just to get my apps deployed on one VPS.

At this point, I nixed Coolify because of the reliability issue above and the unnecessary resource usage when running everything on one node, which is my entire goal here. Additionally, I couldn’t find docs about running commands to manage Coolify - only using the UI - so that was a dealbreaker too.

Kamal

I really wanted to like Kamal because it’s basically just a wrapper around Docker and every command runs over SSH. Nice and simple - in theory.

Unfortunately, the tl;dr in practice is that it’s too new, the docs are lacking, and errors you might run into are hard to diagnose. ChatGPT doesn’t have much accurate info about it either.

NOTE: I regret that I seem to have lost all of the screenshots I took while testing Kamal, so you will have to take my word for it on this one.

I’ll also say that I know people who deploy using Kamal successfully - mostly with Ruby apps, but I experienced many “gotchas” with this tool. I didn’t like several things about it:

(Minor) You need to run a separate kamal utility on your local machine which requires a ruby install. If you don’t want a ruby dependency, you can use Docker - but then certain commands that rely on stdin/stdout don’t work properly as those don’t get passed to the kamal cli container properly. Gotcha!

(Minor) You have to define a kamal-specific deployment config file. I would have ideally wanted to see a very simple GitHub Actions integration and no extra config beyond that.

(Minor) It had a weird method of managing environment variables where I had to define them in multiple places before they got uploaded to the host.

(Major) Extremely verbose logging to the point where it’s nearly impossible to understand what’s happening if something goes wrong. The deployment log was impossible for me to parse.

(Major) Poorly thought out healthcheck mechanism. It depends on curl existing in your container, which means you cannot easily use “minimal” docker images that lack this utility. I would have wanted to see Kamal itself do the healthcheck without any strange dependencies on the app container, which would be more accurate in cases where the app container is defined with an incorrect network config or something along those lines. (UPDATE: This concern is solved in Kamal 2, where the healthcheck is done the correct way - externally. This is only an issue with Kamal 1.)

(Major) Above all, the tool simply didn’t work for me. When I finally got the app deployed after a few hours of struggling with various gotchas, I thought I had “won.” Then I went to deploy a new version of the app with a simple, non-breaking change and Kamal refused to switch traffic to the new container with some hard to understand error message.

I fixed the healthcheck problem by switching my base image to a full debian image with curl installed, but that caused my image size to balloon by 10x (I was previously using a “distroless” base image), so that wasn’t a workable solution.

The only thing that fixed the traffic-switching failure for me was wiping the host and doing a fresh kamal setup, at which point it started working again on the first deploy…until I deployed another new version of the app again, and so on.

Since I never had a successful test on Kamal and the product did not work as advertised because of that failure, I’m not going to include further detail here.

As a positive, Kamal’s resource consumption was quite good at idle (nothing extra running), but I think it needs a lot of work on documentation and error handling to be a viable choice. It doesn’t matter if it’s the most efficient tool in the world if it doesn’t work.

Kamal 2 is coming out soon which sounds promising, but it’s not here yet (UPDATE: Kamal 2 is out. Let me know if you want to see a review and I’ll update this blog post) and there are better options available today.

Caprover

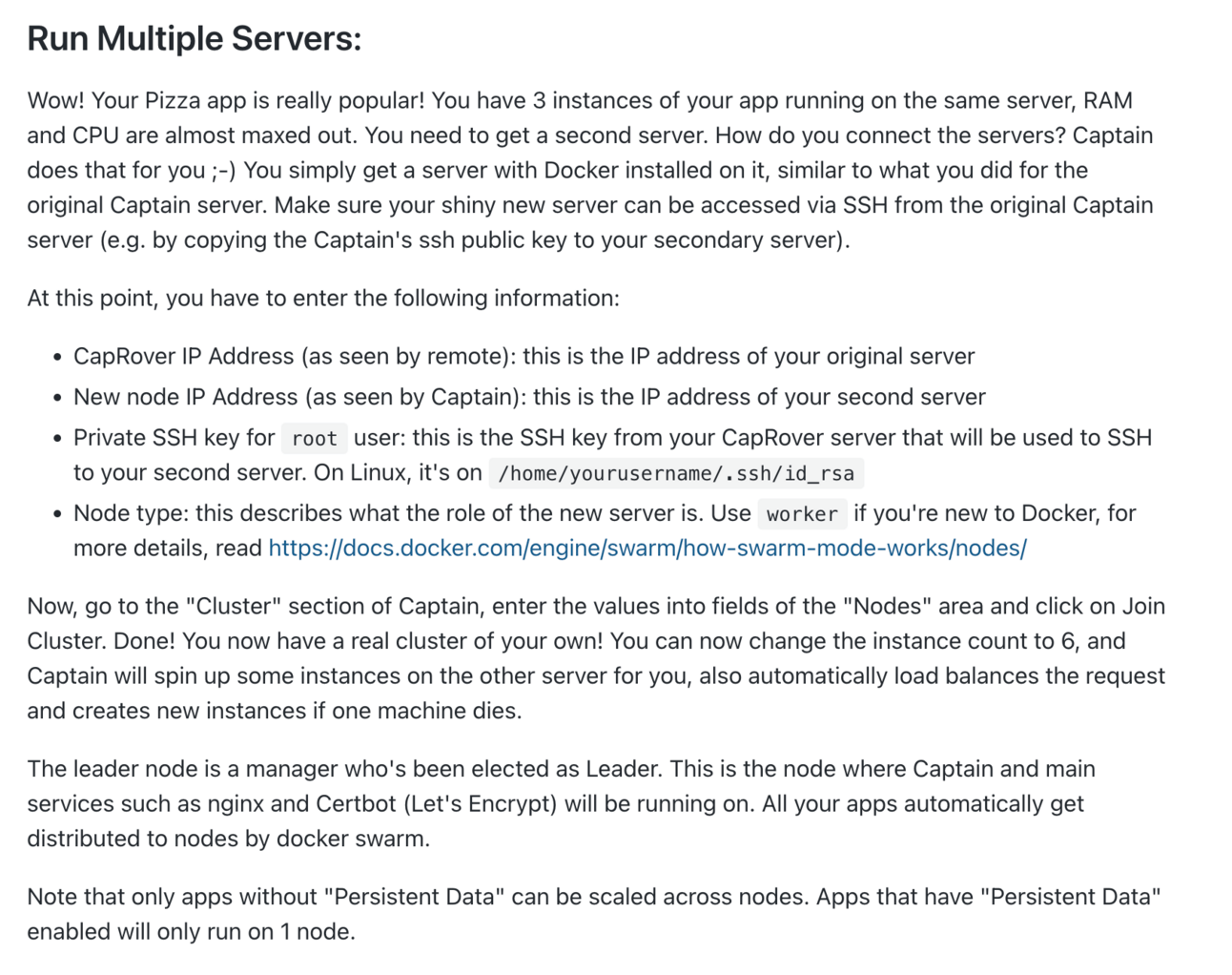

Next, I looked at Caprover. This one was eliminated when I looked at the docs:

This is worrying. First, having to log into a UI and manually fill in fields to scale out a deployment doesn’t fit my need to do everything from the command line.

Second, it appears that some sort of custom leader node election algorithm has been implemented - and not enough detail has been provided.

These are not easy things to implement correctly - and I don’t have confidence the author did it correctly if there are no details here on how exactly it was implemented.

I also didn’t like how the maintainer of the project conducted himself when presented with a problem about one of the dependencies in the project:

Weird, condescending tone in his response. Not a fan of working with people like this.

At this point, I nixed Caprover. Mostly because of the lacking documentation and inability to use the command line to perform scaling actions, but this interaction didn’t help.

K3s

K3s basically did what it promised and the docs are quite extensive. Bravo to the team maintaining K3s.

If you run the install command as described, you get a ready-to-use K8s instance running on fewer resources than you’d expect from a standard K8s deployment which you can confirm with kubectl get node :

I forgot to take a real screenshot of this part, but here’s what it looks like: imagine just one line instead of 3 and 127.0.0.1 instead of node1.example.com.

I hard rebooted the machine right after this and it came back up without any issues.

Unfortunately though, K3s suffers from resource usage problems while idling. Even though it bills itself as lightweight, and it is compared to K8s, it’s really not so lightweight overall:

That’s 17% of your RAM and 4.3% of your CPU being used for…not sure what. metrics-server is also included in the calculation.

It’s also not ideal for ease of use in a CI environment. To deploy a docker image to the cluster, you need to define a fairly wordy yml file every time you deploy and pass it to kubectl apply -f :

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.21.1
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
    spec:
      selector:
        app: nginx
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
      type: LoadBalancer

This can be automated by creating a template file and substituting the image name with the current version to deploy among other properties, but this requires extra time to get set up.
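As a rough sketch of that templating step: the function below assumes a hypothetical template file (say, deployment.tmpl.yml) that contains the literal placeholder __IMAGE__ wherever the image reference should go. Both the file name and the placeholder are my own inventions for illustration, not something K3s prescribes.

```shell
#!/usr/bin/env bash
# Render a Kubernetes manifest from a template by swapping in the image to deploy.
# Assumption (hypothetical): the template contains the literal token __IMAGE__.

render_manifest() {
  local template="$1" image="$2"
  # Use | as the sed delimiter because image references contain slashes.
  sed "s|__IMAGE__|${image}|g" "$template"
}

# Usage in a CI job (not executed here; GIT_SHA is a placeholder variable):
#   render_manifest deployment.tmpl.yml "ghcr.io/you/your-api:${GIT_SHA}" | kubectl apply -f -
```

It works, but it is one more moving part you have to write and maintain yourself, which is the point being made above.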

I also see the number of dependencies involved here as a negative, even if they happen to be packaged together:

Good luck fixing anything on your own if any of these components malfunction! That’s a lotta components.

Last but not least: Dokku 🏆 (Winner!)

Finally, I got to Dokku.

As soon as I installed Dokku, it became obvious that this was the first self-hosted PaaS I had tested that really prioritized all of the things I was looking for.

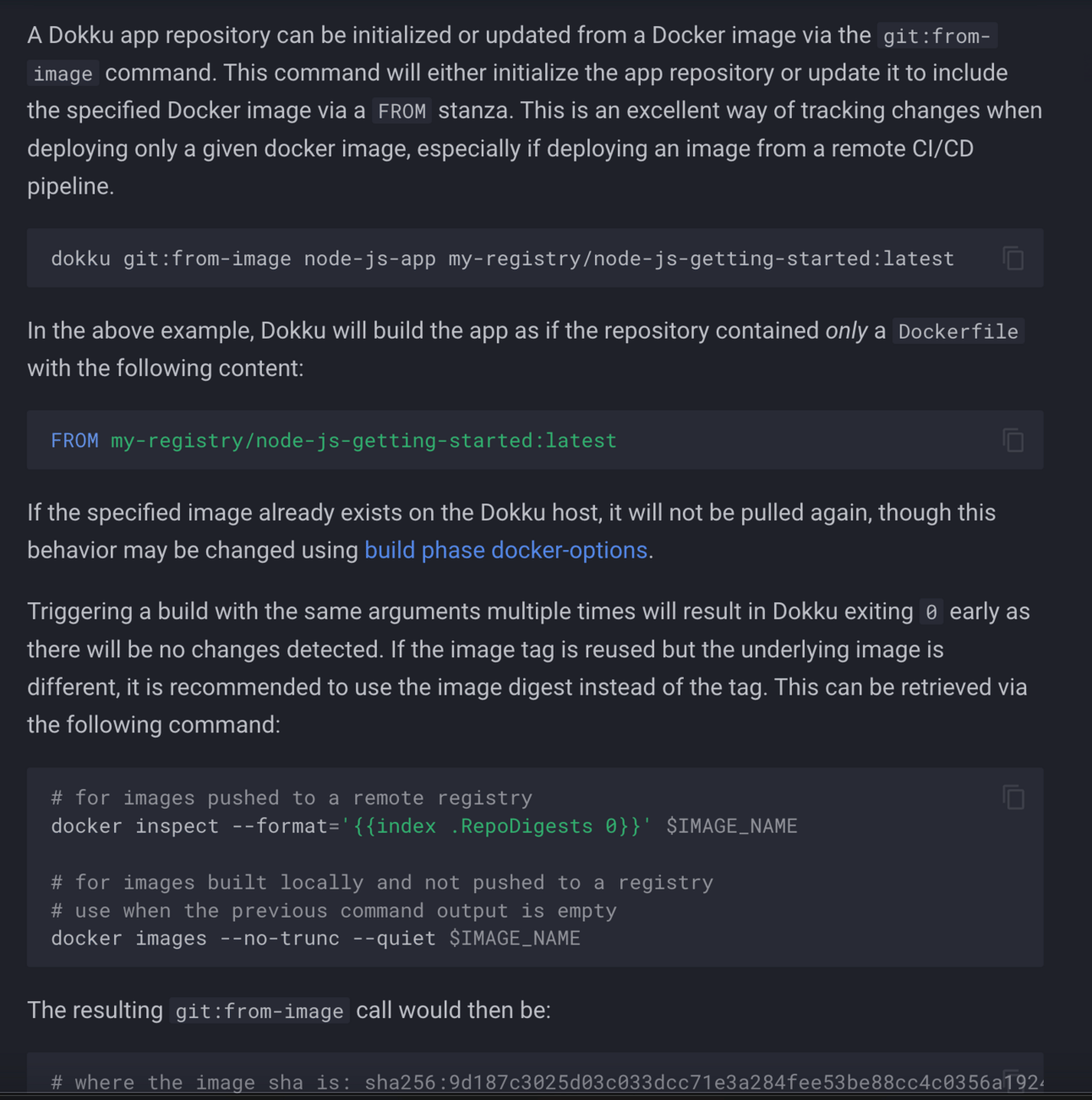

First, the docs are truly great. I won’t go into it too much here, but here’s one selection from the docs about how to deploy a docker image to the host:

Notice how much simpler it is than fighting with some giant k8s yaml file. Just one command!
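In case the screenshot doesn’t render for you, the Dokku command for deploying a prebuilt image is git:from-image. Here is a minimal sketch of calling it over SSH from a laptop or CI job. The DOKKU_HOST variable, the app name, and the image name are placeholders of mine, and DRY_RUN is a hypothetical switch I added so you can preview the command without running it.

```shell
#!/usr/bin/env bash
# Deploy a prebuilt Docker image to a Dokku app over SSH.
# Assumptions (hypothetical): DOKKU_HOST holds your server address and the
# app already exists on the server.

deploy_image() {
  local app="$1" image="$2"
  # When DRY_RUN is set, this prints the command instead of executing it.
  ${DRY_RUN:+echo} ssh "dokku@${DOKKU_HOST}" git:from-image "$app" "$image"
}

# Usage (not executed here):
#   DOKKU_HOST=yourserverip deploy_image your-api ghcr.io/you/your-api:latest
```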

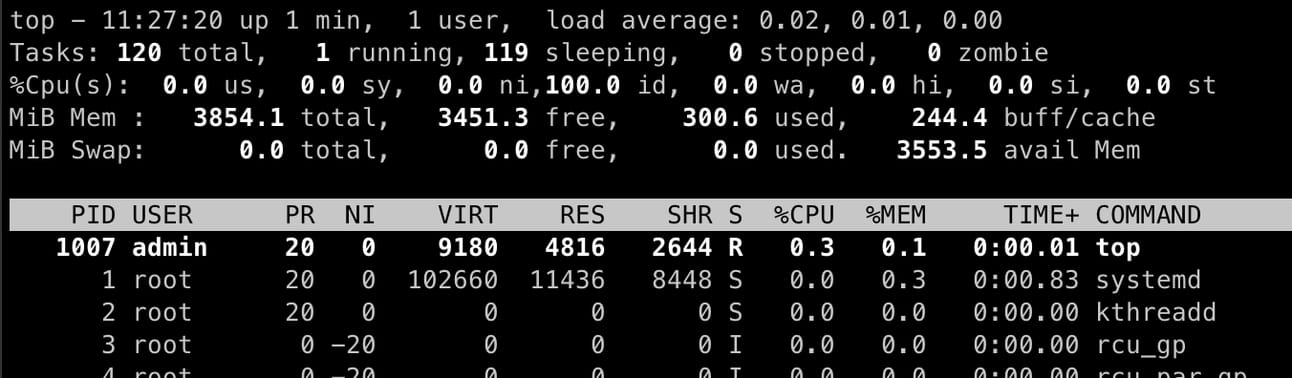

Secondly, after a fresh install, look at the list of running processes:

It’s a great sign when the highest CPU usage comes from my own top command. Look ma, no memory usage either!

This is how it should be done. No extra bullshit, no unnecessary containers. A PaaS that gets out of the way when it isn’t needed!

I then took a look at the dokku executable that gets installed on the host and it turns out it’s a fairly easy to read bash script without any weird dependencies:

Here’s what the script looks like. It eventually just invokes docker for commands you might run on your apps.

Again, perfect. Then I noticed Dokku had a prebuilt Github Actions plugin to deploy an app.

I spent about an hour setting this up, mostly because I didn’t read the docs and forgot to configure docker registry credentials, but it was quite easy to get going with a bit of Terraform magic that I’ll include for everyone below.

At the end of the day, I just needed to add one block to my deploy script:

This is all I had to add to get the deploy working after I followed the setup steps in the docs.

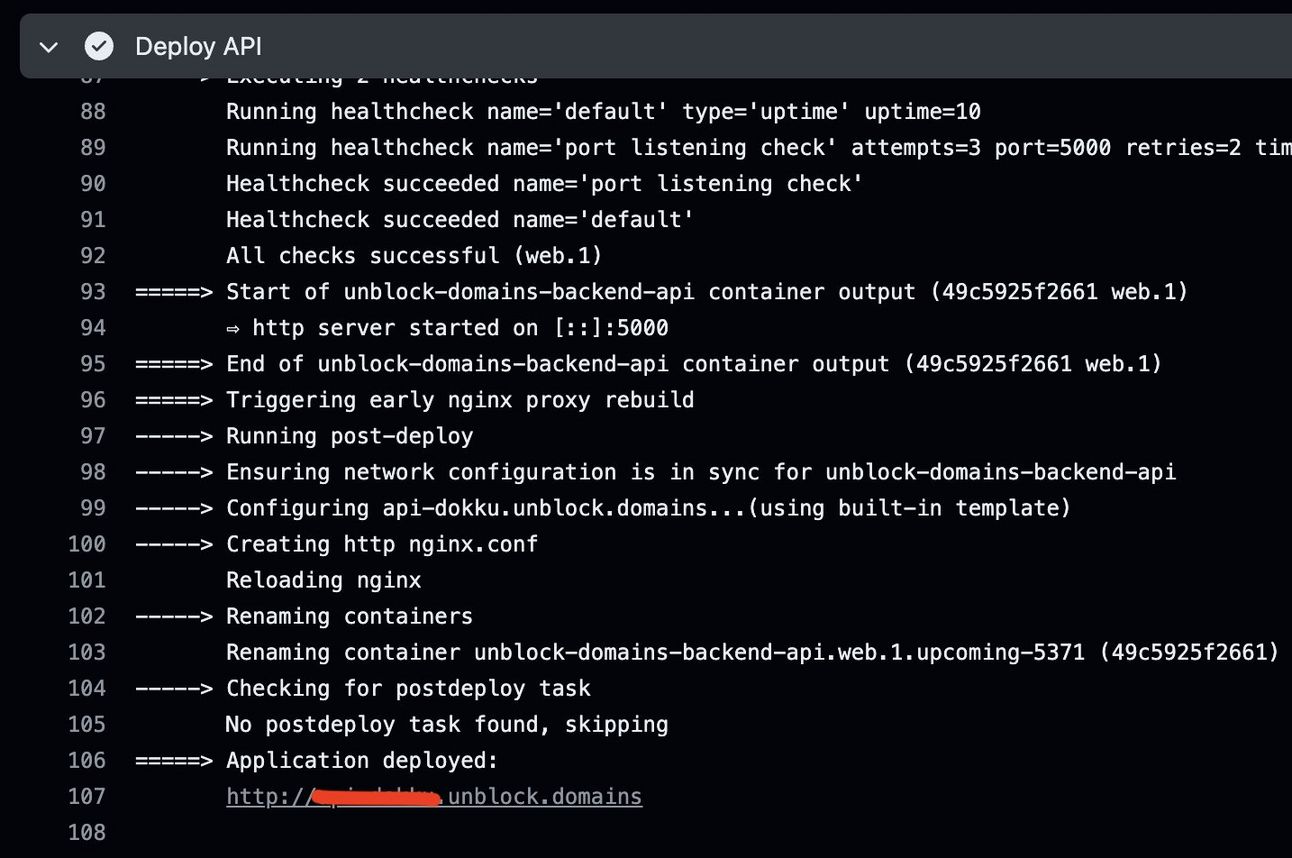

After minimal fuss, my app was deployed, and I was happy to see some sane defaults: nginx is very resource efficient and battle-tested as a reverse proxy, and I liked that it included output from my app so that I could see the deploy succeeded:

This kind of output is something no other solution got right. Kamal in particular had about 10x the output for any command that was run and it was completely impossible to parse and understand, whereas Dokku’s output is clear.

Recommended specs and OS for a production VPS

Now that you’ve seen my strong preference is to use Dokku to host my apps, let’s cover the specs for an ideal virtual private server (VPS) for this purpose.

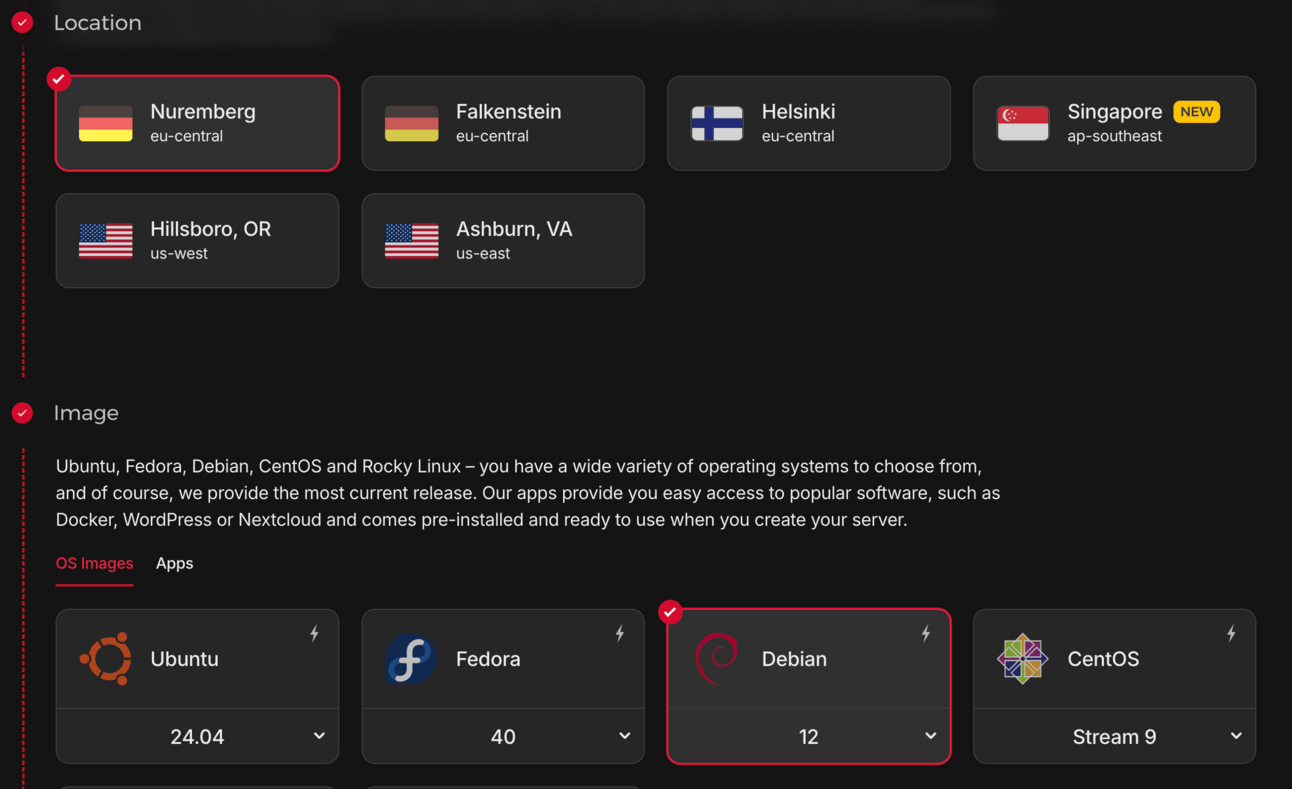

In production, I’d opt for an instance on Hetzner: go to https://www.hetzner.com/cloud/ (not a referral link), select Shared vCPU Ampere, and select the CAX11 node type.

That’s the best bang for your buck since you’ll get 2 vCPU cores and 4 GB RAM for 3.79 EUR/month (about $4.30 US). Hard to beat that kind of deal!

You could also use any of Hetzner’s AMD or Intel-based nodes which would be fine and may be closer to your customers if you have US-based customers, but I prefer arm64 since my development machine is a Macbook Pro with an arm64 processor and I like my development machine to match my production machine in terms of CPU architecture. Most of my customers happen to be in the EU anyway.

This setup with arm64 in dev and in production lowers the chances of some difference in behavior between those environments. I also like that arm64 is cheaper of course.

A note on the OS: I recommend Debian.

It’s a reliable, minimal Linux distro that only includes the necessary software, with very stable versions of said software. It can also automatically install security patches for you, which is a key thing to have as a solo server admin.
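The post doesn’t spell out how the automatic patching is switched on. On Debian the usual mechanism is the unattended-upgrades package, so here is a minimal sketch under that assumption. The function names are mine; the two APT::Periodic settings and the 20auto-upgrades path are the standard ones, but check your image first since some providers enable this already.

```shell
#!/usr/bin/env bash
# Sketch: enable daily automatic security updates on Debian.
# Assumption: the unattended-upgrades package is the mechanism you want.

# Print the APT periodic config that refreshes package lists and applies upgrades daily.
auto_upgrades_conf() {
  cat <<'EOF'
APT::Periodic::Update-Package-Lists "1";
APT::Periodic::Unattended-Upgrade "1";
EOF
}

# Install the package and write the config. Run as root on the VPS.
enable_auto_upgrades() {
  apt-get install -y unattended-upgrades
  auto_upgrades_conf > /etc/apt/apt.conf.d/20auto-upgrades
}

# Usage on the server (not executed here):
#   enable_auto_upgrades
```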

As an endorsement: I’ve used various versions of Debian in production with zero issues for many years now, and a previous company I worked for (with a market cap of tens of billions of dollars) ran 100% of its servers on Debian, also with no issues.

Now for a note on IPv4 vs. IPv6: it may seem tempting to save money and go for an IPv6-only node because Hetzner will give you a further discount. Do not do this!

Why? Well, many websites don’t support IPv6 yet, and you will run into connection errors if you have a dependency on a 3rd party website that doesn’t. You might not even realize that you have a dependency on one of these sites and run into some very hard-to-diagnose bug as a result.

For example, github doesn’t support IPv6 yet and neither does X. You can confirm which websites support this by issuing a dig command.

    dig AAAA x.com +short

If the above command doesn’t have any output, the website in question does not yet support IPv6 and any requests made to it from a host with only an IPv6 public IP will fail.
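If you want to check all of your third-party dependencies in one go, the same dig call can be wrapped in a loop. This is only a sketch: the function names are mine, and the host list in the usage line is a placeholder for whatever your app actually talks to.

```shell
#!/usr/bin/env bash
# Report whether hostnames return anything for an AAAA (IPv6) lookup.

# Classify one host given the output of `dig AAAA <host> +short`.
# Caveat: a CNAME-only answer also counts as output, so treat this as a first pass.
ipv6_status() {
  local host="$1" aaaa_output="$2"
  if [ -n "$aaaa_output" ]; then
    echo "$host: has AAAA records"
  else
    echo "$host: no AAAA records (IPv4 only)"
  fi
}

# Run the lookup for every host passed as an argument.
check_hosts() {
  local host
  for host in "$@"; do
    ipv6_status "$host" "$(dig AAAA "$host" +short)"
  done
}

# Usage (needs network access and dig installed, not executed here):
#   check_hosts github.com x.com
```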

Deploying Dokku in Production as a solo developer

If you don’t feel like reading the docs, which are quite good, here’s my own guide on how to get Dokku deployed to a Hetzner node in production:

First tap “Add Server”

Then select a location close to your customers and Debian as the OS

Finally select Ampere as the node type and pick the cheapest one. Notice the great deal: 3.79 EUR/mo 👍

Finish the rest of the wizard, and log into the node with SSH as root, for example ssh root@yourserverip.

Then paste this bash script below. Important notes:

1. Make sure to edit it with your SSH public key from your laptop, and another one for your CI system if any.

2. Also make sure to add your app’s environment variables if any under “make sure to set your app config here”

To generate a public key, just use:

    ssh-keygen -t ed25519 -C "github-actions"

And copy the output from the .pub file that it put in your ~/.ssh folder into the script below “ADD YOUR KEYS BELOW!”

    #!/bin/bash

    # Ensure latest updates are installed
    apt-get update -y
    apt-get upgrade -y

    # Install Dokku via official install script (make sure to find the latest version on dokku.com and use that as the DOKKU_TAG and url for wget)
    echo 'Installing Dokku...'
    wget -NP . https://dokku.com/install/v0.34.9/bootstrap.sh
    sudo DOKKU_TAG=v0.34.9 bash bootstrap.sh

    # Add SSH keys to Dokku so our dev machine + CI tool can run Dokku commands. ADD YOUR KEYS BELOW!
    echo 'Done installing Dokku. Adding SSH keys...'
    # Example: echo 'ssh-ed25519 AAAA... your-laptop' | dokku ssh-keys:add your-laptop
    # Example: echo 'ssh-ed25519 AAAA... github-actions' | dokku ssh-keys:add github-actions

    # Set up Dokku apps and credentials
    echo 'Done adding SSH keys. Creating apps...'
    dokku apps:create your-api
    echo 'Done creating apps. Configuring app domains...'
    dokku domains:set your-api api.yoursite.com

    # Set up log export (comment out these lines to disable it)
    echo 'Done configuring app domains. Starting vector for logging...'
    dokku logs:vector-start
    wget -O /var/lib/dokku/data/logs/vector.json "${VECTOR_SINK_CONFIG_URL}"

    # Let Dokku pull images from your Docker registry
    echo 'Done configuring vector. Authenticating with Docker registry...'
    dokku registry:login "${DOCKER_REGISTRY_HOST}" "${DOCKER_REGISTRY_USERNAME}" "${DOCKER_REGISTRY_ACCESS_TOKEN}"

    # Make sure to set your app config here if any
    # dokku config:set your-api SOME_APP_SECRET1=value1 SOME_APP_SECRET2=value2

    # Reboot and finalize installation
    echo 'Done with everything, waiting 30s before rebooting to finalize installation.'
    sleep 30
    systemctl reboot

For logging, you can either comment out the dokku logs line to disable exporting logs, or sign up for a free account with Better Stack to store 3GB/month of logs for free.

If you do choose to use Better Stack, they support Dokku natively so just create a new log source and they will give you a command to set up the log source with Dokku

The create source UI with Dokku selected

You will need to run the script like this:

    DOCKER_REGISTRY_HOST=ghcr.io DOCKER_REGISTRY_USERNAME=username DOCKER_REGISTRY_ACCESS_TOKEN=ghp_... VECTOR_SINK_CONFIG_URL="https://urlfrombetterstack.com" ./init.sh

When run, this script will take about 10-15 minutes to finish and:

Install Dokku which will use nginx as a reverse proxy for all your apps by default. You shouldn’t need to configure this at all.

Pre-create all of your apps. Dokku will auto create any apps that don’t exist when you deploy for the first time, but it’s better to pre-create them so you can set the domain for each app upfront and have your app work immediately.

Set up Dokku to be able to pull images from your Docker registry

Set up logging with export to Better Stack (nice UI for viewing your app logs in a web interface and to create alerts)

Reboot the VPS (so don’t be alarmed if you get disconnected)

Wait the full 10-15 mins for the process to complete and the node to come back online. To test that the process worked, run this command on your local machine:

    ssh dokku@yourserverip version

This will use the SSH key you generated above. And you should get an output showing you the version of Dokku running on the server.

Now update your CI script (hopefully you’re using GitHub Actions, it makes this part easier) to include one additional command which includes the SSH private key you generated above as a GitHub Repository Secret:

    - name: Deploy API
      uses: dokku/[email protected]
      with:
        ssh_private_key: ${{ secrets.SSH_PRIVATE_KEY }}
        git_remote_url: ssh://dokku@yourserverip:22/${{ github.repository }}-api
        deploy_docker_image: ghcr.io/${{ github.repository }}-api:${{ github.sha }}

Run the deploy and you should see a message showing you that your app was deployed to Dokku at the URL you set. You’re done with deployment!

Security and Hardening

Now that your app has been deployed, it’s time to think about how to harden it so that attackers will have a tougher time…well, attacking you.

The first recommendation I always give to people is to set up a Cloudflare account.

Putting Cloudflare in front of your domains prevents a whole class of DDoS attacks and Cloudflare is able to cache certain responses for you to save you bandwidth.

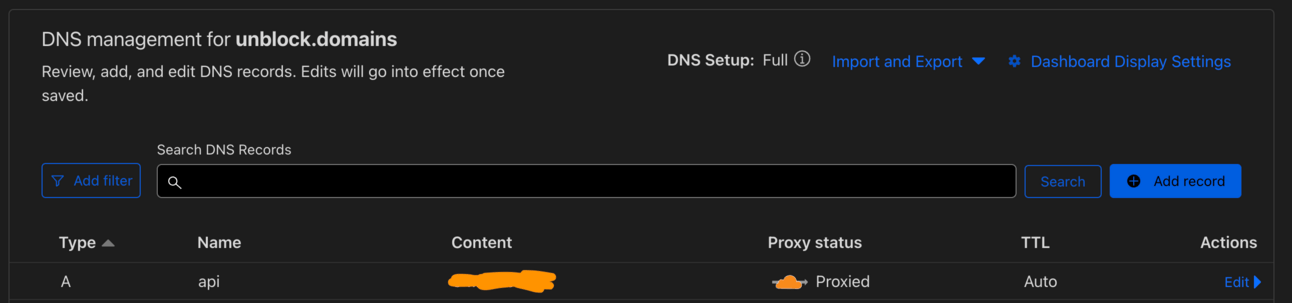

After you set Cloudflare up, go to the websites tab and add your app’s website, then edit the DNS settings:

In the above screenshot, you’ll want to create an A record with the IP address pointing to your VPS’ IP address with Dokku on it and make sure “Proxy status” is proxied (orange cloud icon).

Then, back in your Hetzner control panel, create a firewall rule that only allows Cloudflare IPv4 and IPv6 addresses (copy and paste both sets of IPs from here: https://www.cloudflare.com/ips/) to access your VPS on port 80 (the web port that Dokku’s nginx instance is serving traffic on):

Firewall setup example

Then scroll down to Apply to and make sure you select the server you just created

Then hit Create Firewall.

The effect of this is that only cloudflare IP addresses will be able to communicate with your VPS on port 80. This means attackers will be completely blocked from hitting your VPS’ IP directly, which would otherwise bypass Cloudflare.
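Cloudflare’s ranges change occasionally, so it can help to pull them with a script instead of copying by hand. The sketch below assumes the plain-text ips-v4 and ips-v6 endpoints that Cloudflare links from that page. The function names are mine, and whether the Hetzner firewall form accepts a comma-separated paste is an assumption, so drop the join step if you need one range per line.

```shell
#!/usr/bin/env bash
# Fetch Cloudflare's published IP ranges for use in a firewall allowlist.

# Join newline-separated CIDR ranges from stdin into one comma-separated line.
join_cidrs() {
  paste -sd, -
}

# Download both lists; the extra echo guards against a missing trailing newline,
# and grep drops any blank lines that result.
fetch_cloudflare_ranges() {
  {
    curl -fsS https://www.cloudflare.com/ips-v4; echo
    curl -fsS https://www.cloudflare.com/ips-v6; echo
  } | grep -v '^$' | join_cidrs
}

# Usage (needs network access, not executed here):
#   fetch_cloudflare_ranges
```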

You might also consider adding a rule that only allows GitHub Actions CI IP addresses and your home IP to access port 22 for SSH.

Finally, to lock down your SSH server even further, make sure to disable password auth. This should already be configured as most Debian images provided by hosting providers do this already, but good to double check:

This will only allow users with valid SSH keys to connect and avoids the problem where attackers attempt to brute force passwords.
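The setting in question is PasswordAuthentication no in /etc/ssh/sshd_config. One way to double check it, sketched here with a helper name of my own, is to ask sshd for its effective configuration with sshd -T, which prints every option in lowercase, and look for that line.

```shell
#!/usr/bin/env bash
# Check that the SSH server rejects password logins.

# Reads the effective sshd config (the output of `sshd -T`) on stdin and
# succeeds only if password authentication is turned off.
password_auth_disabled() {
  grep -qi '^passwordauthentication no$'
}

# Usage on the VPS as root (not executed here):
#   sshd -T | password_auth_disabled && echo "password auth is off"
```

If the check fails, set PasswordAuthentication no in the config file and restart the SSH service before logging out, keeping your current session open in case something goes wrong.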

Conclusion

That’s all we have, folks! Thanks so much for reading. If there are any other self-hosting tools you’d like me to review, leave me a comment and I’ll update this blog post accordingly.